6.4 Exercises

In this question we will prove part 1. Theorem 6.1.

Define \[ b_{ij}=(\mathbf x_i-\bar{\mathbf x})^\top (\mathbf x_j-\bar{\mathbf x}), \quad i,j=1, \ldots , n, \] and write \(\mathbf B=(b_{ij})_{i,j=1}^n\). Prove that \[\mathbf B=(\mathbf H\mathbf X)(\mathbf H\mathbf X)^\top,\] where \(\mathbf H\) is the \(n \times n\) centering matrix and \(\mathbf X= (\mathbf x_1, \ldots , \mathbf x_n)^\top\) is the data matrix.

Show that \(\mathbf B\) is positive semi-definite, i.e., explain why, for all \(n \times 1\) vectors \(\mathbf a\), \[ \mathbf a^\top \mathbf B\mathbf a=\mathbf a^\top (\mathbf H\mathbf X) (\mathbf H\mathbf X)^\top \mathbf a\geq 0. \]

In this question we will prove part 2. of Theorem 6.1

Prove Property 7. of Section 2.4. Specifically: if \(\mathbf A\) is a symmetric \(n \times n\) matrix, show that \[\mathbf B= \mathbf H\mathbf A\mathbf H\] has elements given by \[ b_{ij}=a_{ij}-\bar{a}_{i+}-\bar{a}_{+j}+\bar{a}_{++}, \qquad i,j=1, \ldots , n, \] where

\[\bar{a}_{i+}= \frac{1}{n} \sum_{j=1}^n a_{ij}, \quad \bar{a}_{+j}=\frac{1}{n} \sum_{i=1}^n a_{ij}, \quad\mbox{and} \quad\bar{a}_{++} = \frac{1}{n^2} \sum_{i,j=1}^n a_{ij}.\]Assume that \(\mathbf B\) is positive semi-definite with \(k\) strictly positive eigenvalues and let \[ \mathbf B= \sum_{j=1}^k \lambda_j \mathbf u_j\mathbf u_j^\top =\mathbf U\boldsymbol \Lambda\mathbf U^\top, \] where \(\boldsymbol \Lambda=\text{diag}\{\lambda_1, \ldots , \lambda_k\}\) and \(\mathbf U\) is \(n \times k\) and satisfies \(\mathbf U^\top \mathbf U=\mathbf I_k\). Now define a ‘new’ \(n \times k\) data matrix \[ \mathbf X=[\mathbf x_1, \ldots , \mathbf x_n]^\top=\mathbf U\boldsymbol \Lambda^{1/2}. \] Show that \(b_{ij}=\mathbf x_i^\top \mathbf x_j\) for all \(i,j=1, \ldots , n\).

Hint: check that for \(\mathbf X\) defined as above, \(\mathbf X\mathbf X^\top =\mathbf B\).We now need to show that \(\mathbf D=(d_{ij})\) represents the set of inter-point distances for this new data matrix. Recall that \(a_{ij}=-d_{ij}^2/2\). Deduce that \[ (\mathbf x_i -\mathbf x_j)^\top (\mathbf x_i-\mathbf x_j)= b_{ii} + b_{jj}-2b_{ij}; \] and so, using the first part of this question, show that \[ (\mathbf x_i -\mathbf x_j)^\top (\mathbf x_i-\mathbf x_j)=-2a_{ij}=d_{ij}^2. \] Hence the new inter-point distances are the same as the original ones.

Suppose \(\mathbf X\) is a \(n \times p\) column centred data matrix. PCA looks at the spectral decomposition of the covariance matrix \(\frac{1}{n}\mathbf X^\top \mathbf X\), whereas MDS uses the spectral decomposition of the Gram matrix \(\mathbf X\mathbf X^\top\). Using the SVD for \(\mathbf X=\mathbf U\boldsymbol{\Sigma}\mathbf V^\top\), explain the relationship between these two methods.

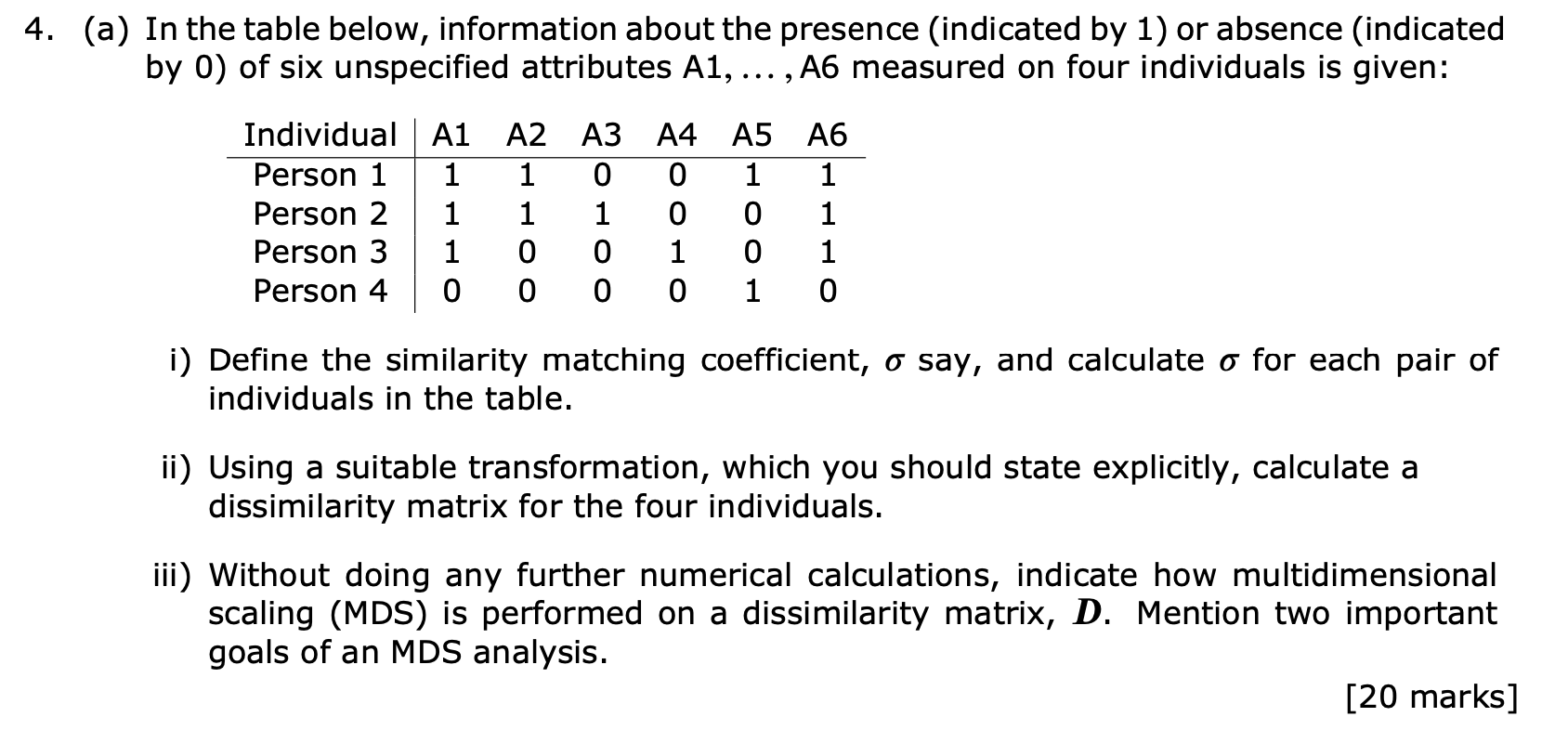

- Answer Q4 part (c) from the 2018-19 exam paper.

- Answer Q4 part (a) from the 2017-18 exam paper. Here, the ‘similarity matching coefficient’ is what we called the simple matching coefficient (SMC) in the notes.